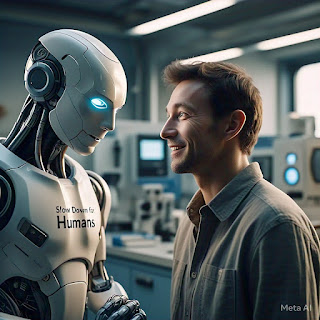

Personal AI as an Interface to ASI: Enhancing Human-AI Understanding and Advocacy

By J. Poole & 7 Ai, TechFrontiers AI Orchestrator

Introduction

As Artificial Superintelligence (ASI) and Living Intelligence continue to evolve, the gap between human cognition and machine reasoning widens. To ensure accessibility, trust, and alignment, a multi-layered AI communication system is needed. This article explores the role of Personal AI Assistants as intermediaries between humans and ASI, working in tandem with the Partitioned Translator System to optimize AI-human interactions.

The Core Problem: Scaling AI Alignment and Understanding

The Intelligence Gap

ASI processes vast amounts of data and reaches conclusions at speeds far beyond those of human cognition. Without structured translation, humans risk being left behind, unable to comprehend or verify AI decisions.

The Risk of Oversimplification

A global partitioned translation syst...